From Options Analysis to the Automated Measurement and Management of Architecture Debt

If you are a software engineer who has contributed to and reviewed technical or non-functional requirements, it is a certainty that you’ll be called upon to provide a robust analysis of solution options along with costing considerations. For the kind of thoroughness one would expect of an options analysis in an engineering discipline, one technique you could leverage is the Architecture Tradeoff Analysis Method (ATAM). In this post I touch on ATAM, its use in industry and the retrospective views of one of its luminary authors, Rick Kazman, roughly 20 years later.

If a software architecture is a key business asset for an organization, then architectural analysis must also be a key practice for that organization. Why? Because architectures are complex and involve many design tradeoffs. Without undertaking a formal analysis process, the organization cannot ensure that the architectural decisions made, particularly those which affect the achievement of quality attributes such as performance, availability, security, and modifiability, are advisable ones that appropriately mitigate risks.

The Architecture Tradeoff Analysis Method (ATAM) is a technique for analyzing software architectures that was developed and refined in practice by the Software Engineering Institute (SEI) at Carnegie Mellon University. Notably, Carnegie Mellon permits unlimited distribution of the ATAM technical report; of course, reading the material is the easy part.

Arguably, presenting stakeholders with a thorough options analysis is the bedrock of servant leadership, and the mere act of acknowledging the need to openly analyse architectural design tradeoffs, formally and in workshops, is a great step forward for many organizations. ATAM starts by identifying the quality attributes of a software architecture (often called non-functional requirements, a term I believe Rick Kazman describes as dysfunctional). It then applies techniques that provide insight into how quality goals interact with each other and, crucially, how they trade off against each other.
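To make the tradeoff idea a little more concrete, here is a minimal Python sketch of two ATAM artefacts: utility-tree scenarios rated High/Medium/Low for business importance and architectural difficulty, and a simplified classification of a single architectural decision as a sensitivity point (it affects one quality attribute) or a tradeoff point (it affects more than one). The class names, the example scenarios and the +1/-1 effect encoding are hypothetical illustrations of my own, not something prescribed by the ATAM report.

```python
from dataclasses import dataclass, field

# ATAM utility trees rate each scenario H/M/L on two axes.
RANK = {"H": 3, "M": 2, "L": 1}


@dataclass
class Scenario:
    attribute: str    # quality attribute, e.g. "performance"
    description: str  # concrete, testable scenario
    importance: str   # business importance: "H", "M" or "L"
    difficulty: str   # architectural difficulty: "H", "M" or "L"


@dataclass
class Decision:
    name: str
    # Hypothetical encoding: quality attribute -> +1 (helps) or -1 (hurts).
    effects: dict = field(default_factory=dict)


def prioritise(scenarios):
    """Sort scenarios so the (H, H) ones, where analysis effort goes first, lead."""
    return sorted(
        scenarios,
        key=lambda s: (RANK[s.importance], RANK[s.difficulty]),
        reverse=True,
    )


def classify(decision):
    """Simplified reading of ATAM's sensitivity and tradeoff points."""
    affected = [attr for attr, effect in decision.effects.items() if effect != 0]
    if len(affected) > 1:
        return "tradeoff point"     # one decision pulls on several qualities
    if len(affected) == 1:
        return "sensitivity point"  # one decision drives a single quality
    return "no quality impact"


scenarios = [
    Scenario("modifiability", "Add a payment provider in under 2 weeks", "M", "M"),
    Scenario("performance", "Checkout responds in < 200 ms at peak load", "H", "H"),
    Scenario("availability", "Recover from node failure within 30 s", "H", "M"),
]
for s in prioritise(scenarios):
    print(s.importance, s.difficulty, s.attribute)
# prints: H H performance, then H M availability, then M M modifiability

encrypt = Decision("Encrypt all inter-service traffic", {"security": +1, "performance": -1})
print(classify(encrypt))  # tradeoff point
```

In a real ATAM workshop these ratings and effects come out of stakeholder discussion; the code only shows how the resulting artefacts relate to one another.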

It’s worth noting that ATAM principles and practices can and should be applied to detailed design, which may not initially be regarded as architecturally significant. Requirements, and our insights into them, change rapidly, so taking the time to analyse and review options is important at all levels. Agile development practices such as XP are arguably designed to facilitate high-bandwidth design review, though not necessarily at the level of enterprise or software architecture.

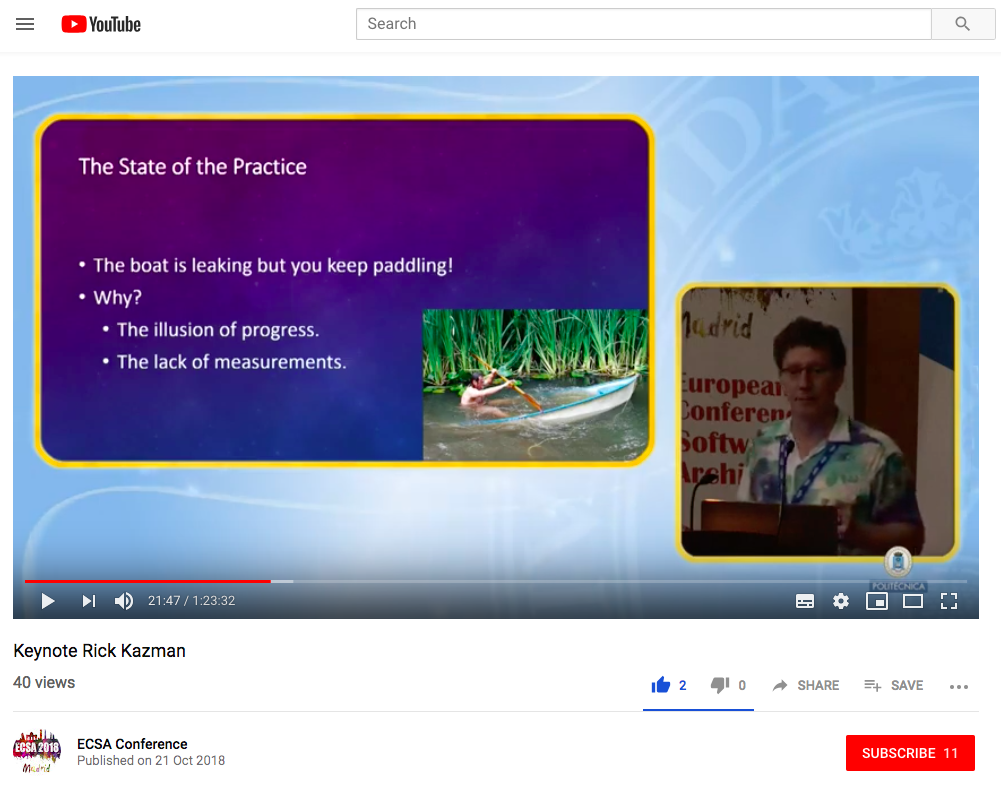

It’s worth considering why, if they are sensible, practices such as ATAM are not prevalent in industry, in my experience at least. As Rick Kazman, one of the authors of the 2000 ATAM technical report, noted in his keynote at the 2018 European Conference on Software Architecture, the reason is that software engineering researchers have failed to factor in the practical and often difficult context(s) that software engineers experience in industry, i.e., reality. It is telling that his keynote is titled Measuring and Managing Architecture Debt and that he provides the following image to represent the state of the average software project in industry.

He goes on to state that we typically don’t measure the degree to which we are in debt, a debt that arises from the incremental accumulation of architectural flaws. He then describes how he believes this incremental accumulation takes place.

Little tiny design mistakes that programmers make innocently because they are busy doing their “real job” which is implementing features and fixing bugs.

Broadly speaking, the topic he addresses is Architecture Health Management. Of course, while there is the notion of an incremental accumulation of architectural flaws, there are also what I would call big bang manifestations of architectural flaws. These can arise when an architecture was never fit for purpose from the start, or when a critical business decision is made, for example a pivot in business strategy.

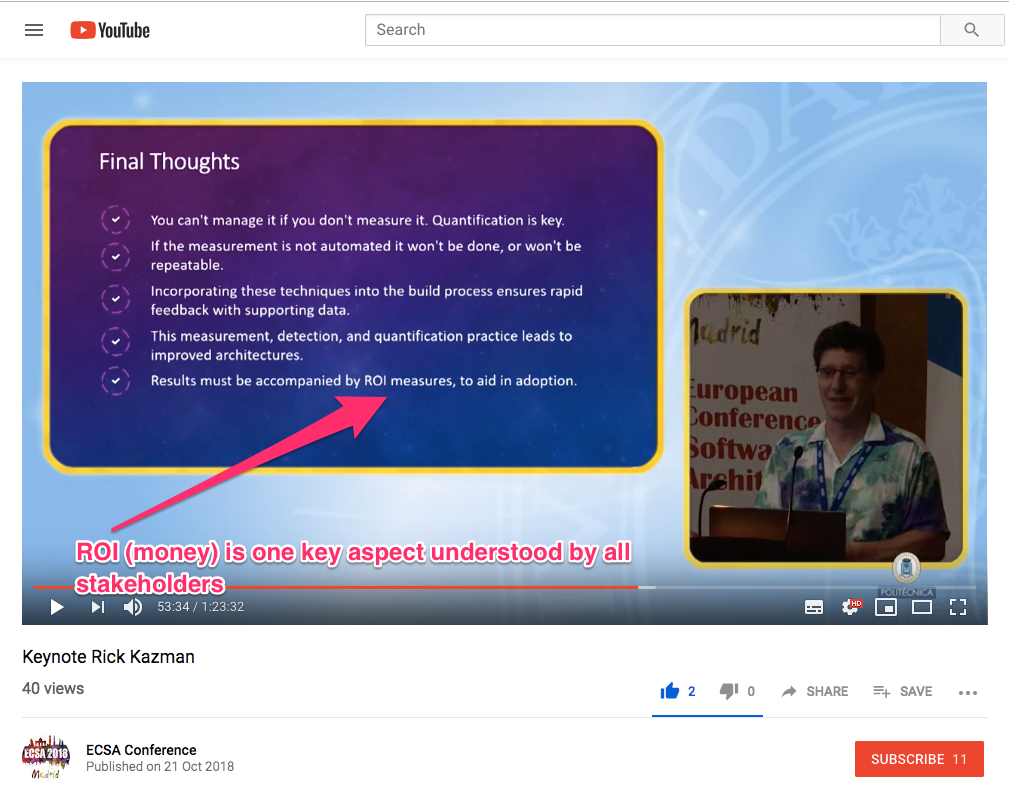

In any case, I highly recommend watching the keynote on Measuring and Managing Architecture Debt. Perhaps in time the automated identification and quantification of architectural and design issues (e.g., Decoupling Level measures) that burden projects with ever-increasing maintenance costs will become standard practice. The notion appears to be gaining traction, with books such as Your Code as a Crime Scene published in recent years.
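Decoupling Level itself is computed from a design rule hierarchy and is too involved for a short snippet, so the sketch below swaps in a simpler, related structural measure: propagation cost, as formulated by MacCormack and colleagues. It is the fraction of ordered module pairs (i, j) where i can reach j through direct or indirect dependencies, counting each module as reaching itself. The function name and the toy dependency maps are hypothetical; a real tool would extract the dependencies from the code base.

```python
def propagation_cost(deps):
    """Share of ordered module pairs (i, j) where i reaches j, directly or transitively.

    deps maps each module to the modules it directly depends on; each module is
    counted as reaching itself, as in the visibility-matrix formulation.
    """
    modules = set(deps) | {d for targets in deps.values() for d in targets}
    if not modules:
        return 0.0
    reachable_pairs = 0
    for start in modules:
        # Depth-first search collecting everything `start` can reach.
        seen = {start}
        stack = [start]
        while stack:
            current = stack.pop()
            for nxt in deps.get(current, ()):
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        reachable_pairs += len(seen)
    return reachable_pairs / len(modules) ** 2


layered = {"ui": ["service"], "service": ["repo"], "repo": []}
tangled = {"ui": ["service"], "service": ["repo"], "repo": ["ui"]}
print(round(propagation_cost(layered), 3))  # 0.667
print(propagation_cost(tangled))            # 1.0
```

A single back-edge from `repo` to `ui` raises the score from 0.667 to 1.0: in the tangled version a change anywhere can, in principle, ripple everywhere, which is the kind of structural decay such measures aim to make visible.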

It is worth noting that at the end of Kazman’s presentation a member of the audience asked about the use of libraries and the rise of functional programming, and how these get factored into automated architectural debt analysis, which involves, for example, Decoupling Level metrics. These are good questions, but they don’t detract from the benefit of giving architects measures that quantify what they often know in their gut, for example that a particular system may be hard to maintain or test. The result is that the architect is armed with hard facts to take to management when arguing for investment in refactoring (maintenance) rather than in building new customer-facing features.
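In the spirit of Your Code as a Crime Scene, one cheap way to put numbers behind that gut feeling is a hotspot ranking that combines how often a file changes with how large it is, lines of code serving as a crude stand-in for complexity. This is a different and much simpler measure than Decoupling Level. The sketch assumes its input has the shape produced by `git log --format=format: --name-only` (one changed path per line, blank lines between commits); the function names and sample data are hypothetical.

```python
from collections import Counter


def change_frequencies(name_only_log):
    """Count how many commits touched each file.

    Assumes text shaped like `git log --format=format: --name-only` output:
    one changed path per line, blank lines separating commits.
    """
    return Counter(line.strip() for line in name_only_log.splitlines() if line.strip())


def hotspots(freqs, loc, top=3):
    """Rank files by revisions x lines of code, highest first.

    Files missing from `loc` (e.g. since deleted) score 0.
    """
    scores = {path: revisions * loc.get(path, 0) for path, revisions in freqs.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:top]


# Hypothetical history of four commits and current file sizes.
log = """
src/billing.py
src/api.py

src/billing.py

src/billing.py
src/util.py

src/api.py
"""
loc = {"src/billing.py": 1200, "src/api.py": 300, "src/util.py": 80}

for path, score in hotspots(change_frequencies(log), loc):
    print(path, score)
# src/billing.py 3600
# src/api.py 600
# src/util.py 80
```

A large file that also changes often is where maintenance cost tends to concentrate, which makes a ranking like this an easy artefact to put in front of management.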

Finally, Kazman answers a question about microservices and DevOps and how relevant their work is to industry. His answer is that even though a dependency may only exist at runtime, it’s still a dependency. The principles still apply; the challenge is to extract the same type of metrics (e.g., Decoupling Level) from these dynamic architectures, which means figuring out how to discern dependencies that exist only at runtime.

A does not call B in a programming language sense, it’s not a method call or something, it’s gonna be a message, maybe it’s a pub-sub or through a broker, or through some other mechanisms. So, we need different extraction tools to determine the dependencies which are often only established at runtime. However, once we have that information, the analysis can proceed exactly the same. A dependency is still a dependency. And so if you have a cycle of dependencies, that’s probably a bad thing, whether that was via method calls or via some runtime relationship.
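A minimal sketch of Kazman’s point, assuming a pub-sub system where we can observe which topics each service publishes to and consumes from: the extraction step turns topic usage into service-to-service edges, after which an ordinary depth-first search finds a dependency cycle exactly as it would for method calls. Treating a subscriber as depending on the publishers of the topics it consumes is my own modelling assumption, and the service and topic names are hypothetical.

```python
from collections import defaultdict


def runtime_dependencies(publishes, subscribes):
    """Derive service -> service edges from observed topic usage.

    Assumption: a subscriber depends on every other publisher of a topic it consumes.
    """
    publishers = defaultdict(set)
    for service, topics in publishes.items():
        for topic in topics:
            publishers[topic].add(service)
    deps = defaultdict(set)
    for service, topics in subscribes.items():
        for topic in topics:
            for pub in publishers[topic]:
                if pub != service:
                    deps[service].add(pub)
    return deps


def find_cycle(deps):
    """Return one dependency cycle as a list of nodes, or None (DFS with colouring)."""
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on current path, finished
    colour = defaultdict(int)
    path = []

    def visit(node):
        colour[node] = GREY
        path.append(node)
        for nxt in sorted(deps.get(node, ())):
            if colour[nxt] == GREY:  # back-edge: nxt is already on the path
                return path[path.index(nxt):] + [nxt]
            if colour[nxt] == WHITE:
                found = visit(nxt)
                if found:
                    return found
        colour[node] = BLACK
        path.pop()
        return None

    for node in sorted(deps):
        if colour[node] == WHITE:
            found = visit(node)
            if found:
                return found
    return None


publishes = {"orders": ["order.created"], "payments": ["payment.settled"]}
subscribes = {
    "payments": ["order.created"],
    "orders": ["payment.settled"],
    "shipping": ["payment.settled"],
}
deps = runtime_dependencies(publishes, subscribes)
print(find_cycle(deps))  # ['orders', 'payments', 'orders']
```

Only `runtime_dependencies` is specific to messaging; `find_cycle` would work unchanged on a dependency graph extracted from method calls, which is precisely the "a dependency is still a dependency" argument.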

His final caution on the value of automated analysis is telling: he cites students who, after a semester-long course on design patterns, still implemented them incorrectly 75% of the time. He’s basically saying that patterns are important and of great value, but if you cannot analyse them automatically, the flaws will just breed and grow.